Learning to dance: A graph convolutional adversarial network to generate realistic dance motions from audio

Computers & Graphics

Abstract

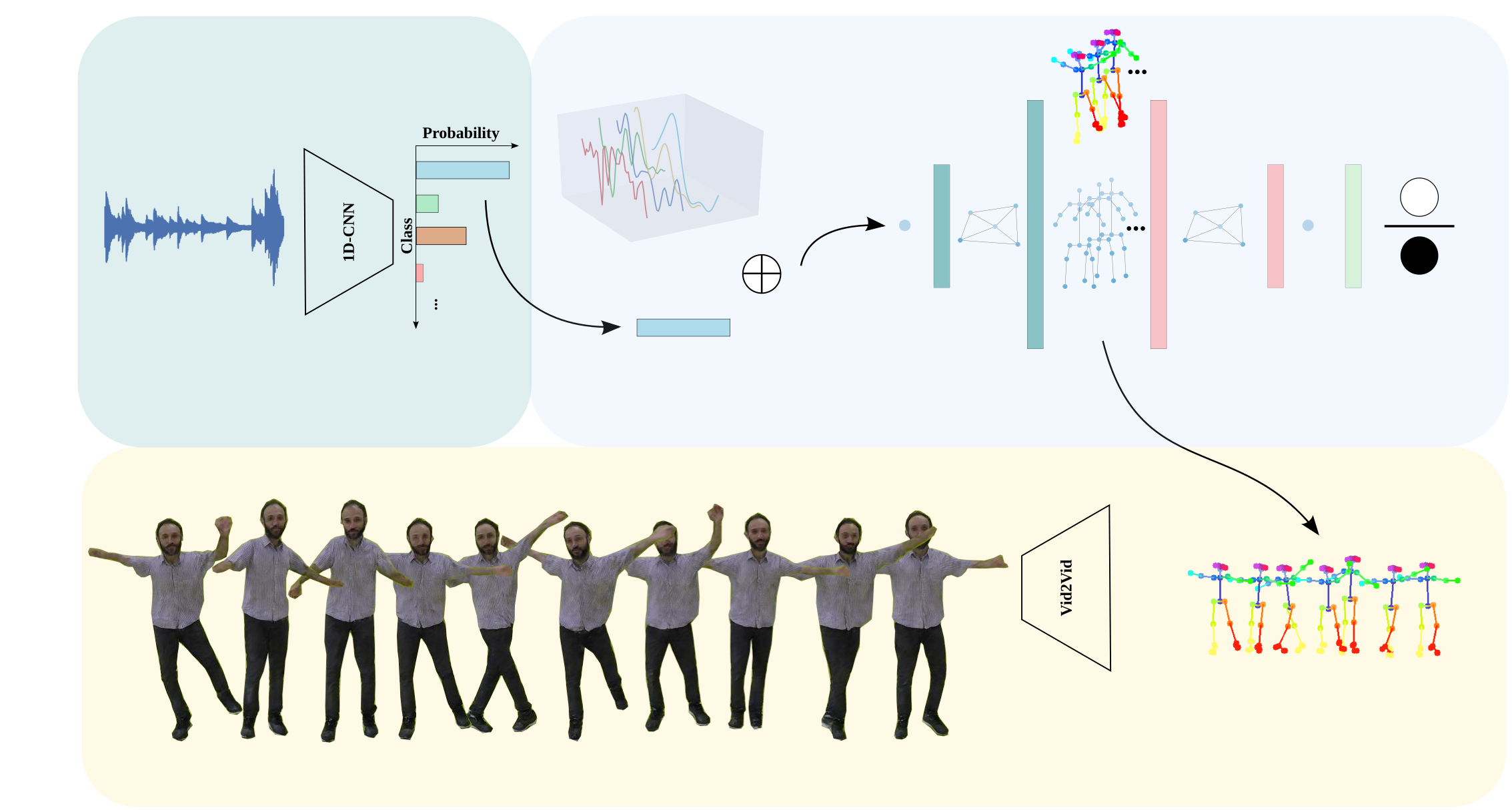

Synthesizing human motion through learning techniques is becoming an increasingly popular way to reduce the need for new data capture when producing animations. Learning to move naturally from audio, and in particular to dance, involves some of the more complex motions that humans often perform effortlessly. Each dance movement is unique, yet such movements maintain the core characteristics of the dance style. Most approaches that address this problem with classical convolutional and recursive neural models suffer from training and variability issues due to the non-Euclidean geometry of the motion manifold structure. In this paper, we design a novel method based on graph convolutional networks to tackle the problem of automatic dance generation from audio information. Our method is capable of generating natural motions that preserve the key moves of the music style across different generated motion samples, by taking advantage of an adversarial learning scheme conditioned on the input music audio. We evaluate our method using a user study and several quantitative metrics of the generated motions' distributions. The results suggest that the proposed GCN model outperforms the current state-of-the-art dance generation method conditioned on music in different experiments. Moreover, our graph-convolutional approach is simpler, easier to train, and generates more realistic motion styles than the state of the art according to qualitative and several quantitative metrics, with a perceptual quality of visual movement that is even comparable to real motion data.

Paper


Learning to dance: A graph convolutional adversarial network to generate realistic dance motions from audio

João P. Ferreira, Thiago M. Coutinho, Thiago L. Gomes, José F. Neto, Rafael Azevedo, Renato Martins and Erickson R. Nascimento. Learning to dance: A graph convolutional adversarial network to generate realistic dance motions from audio, Elsevier Computers and Graphics, C&A, 2020.

PDF, BibTeX

@article{ferreira2020cag,
  author = {João P. Ferreira and Thiago M. Coutinho and Thiago L. Gomes and José F. Neto and Rafael Azevedo and Renato Martins and Erickson R. Nascimento},
  title = {Learning to dance: A graph convolutional adversarial network to generate realistic dance motions from audio},
  journal = {Computers \& Graphics},
  volume = {94},
  pages = {11--21},
  year = {2021},
  issn = {0097-8493},
  doi = {https://doi.org/10.1016/j.cag.2020.09.009}
}

Demo

Code

[PyTorch]

Data

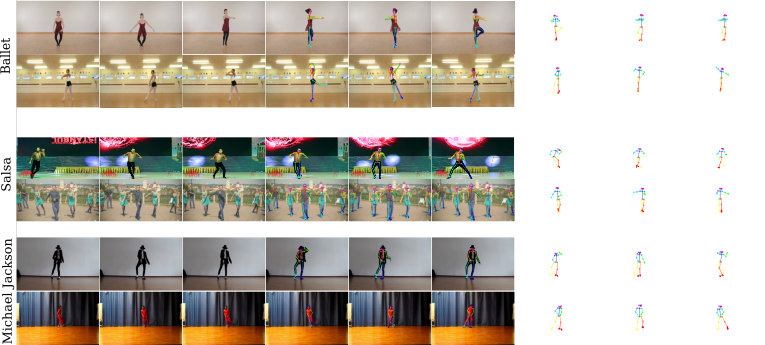

We build a new dataset composed of paired videos of people dancing to different music styles. The dataset is used to train and evaluate the methodologies for motion generation from audio. We split the samples into training and evaluation sets that contain multimodal data for three music/dance styles: Ballet, Michael Jackson, and Salsa. Both sets are composed of two data types: visual data from carefully selected parts of publicly available videos of dancers performing representative movements of the music style, and audio data from the styles we are training on. The figure above shows some samples from our dataset.

Concurrent work

Concurrently with and independently of our work, a number of groups have proposed closely related (and very interesting!) methods for dance generation from music. Here is a partial list:

- Xuanchi Ren, Haoran Li, Zijian Huang, Qifeng Chen. Music-oriented Dance Video Synthesis with Pose Perceptual Loss

- Ruozi Huang, Huang Hu, Wei Wu, Kei Sawada, Mi Zhang, Daxin Jiang. Dance Revolution: Long-Term Dance Generation with Music via Curriculum Learning

- Jiaman Li, Yihang Yin, Hang Chu, Yi Zhou, Tingwu Wang, Sanja Fidler, Hao Li. Learning to Generate Diverse Dance Motions with Transformer

- Zijie Ye, Haozhe Wu, Jia Jia, Yaohua Bu, Wei Chen, Fanbo Meng, Yanfeng Wang. ChoreoNet: Towards Music to Dance Synthesis with Choreographic Action Unit

- Hsin-Ying Lee, Xiaodong Yang, Ming-Yu Liu, Ting-Chun Wang, Yu-Ding Lu, Ming-Hsuan Yang, Jan Kautz. Dancing to Music

Acknowledgements

This work was supported, in part, by NVIDIA, CNPq, CAPES, and FAPEMIG. The template for this webpage is taken from the Speech2Gesture page.